Web scraping can be done in virtually any programming language that supports HTTP and XML or DOM parsing. This tutorial focuses on web scraping with JavaScript in a Node.js server environment, and it assumes that readers understand JavaScript, including ES6 and ES7 syntax. Thanks to Node.js, JavaScript is a great language to use for a web scraper: not only is Node fast, but you’ll likely end up using a lot of the same methods you’re used to from querying the DOM.

Following up on my popular tutorial on how to create an easy web crawler in Node.js, I decided to extend the idea a bit further by scraping a few popular websites. For now, I'll just append the results of web scraping to a .txt file, but in a future post I'll show you how to insert them into a database.

Web scraping isn’t new. However, the technologies and techniques used to power websites are developing rapidly. A lot of websites use front-end frameworks like Vue.js, React, or Angular that load (partial) content asynchronously via JavaScript. Hence, this content is only available once the page's JavaScript has run, typically in a web browser. Learn how to build a web scraper ⛏️ with Node.js using two distinct strategies, including (1) a metatag link preview generator and (2) a fully-interactive bot.

Simple Web Scraping With Javascript May 1, 2018. Sometimes you need to scrape content from a website and a fancy scraping setup would be overkill. Maybe you only need to extract a list of items on a single page, for example. In these cases you can just manipulate the…

Each scraper takes about 20 lines of code and they're pretty easy to modify if you want to scrape other elements of the site or web page.

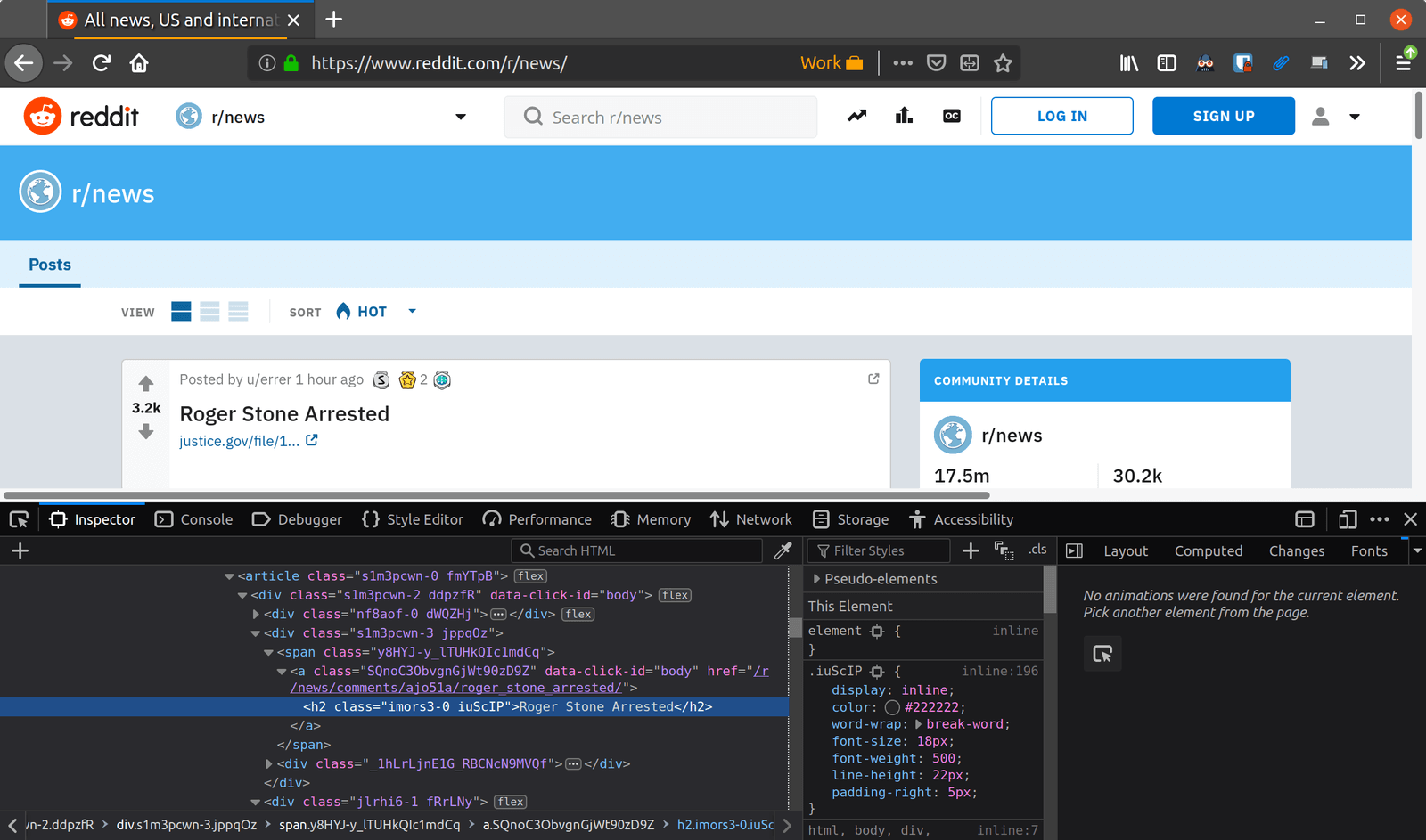

Web Scraping Reddit

First I'll show you what it does and then explain it.

It first visits reddit.com and then collects the title, the score, and the submitter's username for each post. It writes all of this to a .txt file named reddit.txt, separating each entry on a new line. Alternatively, it's easy to separate each entry with a comma or some other delimiter if you want to open the results in Excel or another spreadsheet.
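As a rough illustration of that output step, the sketch below formats entries and appends them to a text file using only Node's built-in `fs` module. The `formatEntry` and `saveEntries` helpers, the field names (`title`, `score`, `user`), and the sample posts are all hypothetical; in the real scraper the values would come from the page.

```javascript
const fs = require('fs');

// Hypothetical helper: join the three fields of one post with a delimiter.
// The default '\n' puts each field on its own line; pass ',' for spreadsheets.
function formatEntry(post, delimiter = '\n') {
  return [post.title, post.score, post.user].join(delimiter);
}

// Hypothetical helper: append every entry to the file, ending each with a newline.
function saveEntries(posts, filePath, delimiter = '\n') {
  for (const post of posts) {
    fs.appendFileSync(filePath, formatEntry(post, delimiter) + '\n');
  }
}

// Made-up sample data standing in for scraped results.
const samplePosts = [
  { title: 'First example post', score: 1234, user: 'example_user' },
  { title: 'Second example post', score: 56, user: 'another_user' },
];
```

Calling `saveEntries(samplePosts, 'reddit.txt')` would append the fields line by line, while `saveEntries(samplePosts, 'reddit.txt', ',')` would write one comma-separated line per post. Because the file is appended to rather than overwritten, repeated runs keep adding to it.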

Okay, so how did I do it?

Make sure you have Node.js and npm installed. If you're not familiar with them, take a look at the paragraph here.

Open up your command line. You'll need to install just two Node.js dependencies. You can do that by either running

as shown below:

Alternate option to install dependencies

Another option is copying over the dependencies and adding them to a package.json file and then running npm install. My package.json includes these:

The actual code to scrape Reddit

Now let's take a look at how I scraped Reddit in about 20 lines of code. Open up your favorite text editor (I use Atom) and copy the following:

This is surprisingly simple. Save the file as scrape-reddit.js and then run it by typing node scrape-reddit.js. You should end up with a text file called reddit.txt that looks something like:

which is the post title, then the score, and finally the username.

Web Scraping Hacker News

Let's take a look at how the posts are structured:

As you can see, there are a bunch of tr HTML elements with a class of athing. So the first step will be to gather up all of the tr.athing elements.

We'll then want to grab the post titles by selecting the td.title child element and then the a element (the anchor tag of the hyperlink).

Note that we skip over any hiring posts by making sure we only gather up the tr.athing elements that have a td.votelinks child, as demonstrated in the following picture:
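The selection rules above can be tried out without touching the live site. The following is a minimal sketch that applies them to a small hand-written HTML sample. The sample markup, the `extractPosts` name, and the example URLs are hypothetical, and the regular expressions are a stand-in for a proper HTML parser with CSS selectors, which a real scraper would normally use because regex matching breaks easily on real-world markup.

```javascript
// Hand-written sample loosely modeled on the structure described above.
// It is NOT the real Hacker News markup.
const sampleHtml = `
<table>
  <tr class="athing">
    <td class="votelinks"><a href="vote?id=1">upvote</a></td>
    <td class="title"><a href="https://example.com/a">Example story A</a></td>
  </tr>
  <tr class="athing">
    <td class="title"><a href="item?id=2">Example Co is hiring</a></td>
  </tr>
  <tr class="athing">
    <td class="votelinks"><a href="vote?id=3">upvote</a></td>
    <td class="title"><a href="https://example.com/c">Example story C</a></td>
  </tr>
</table>`;

function extractPosts(html) {
  // Step 1: gather every tr.athing row.
  const rowPattern = /<tr class="athing"[^>]*>([\s\S]*?)<\/tr>/g;
  // Step 2: inside a row, find the anchor that directly opens a td.title cell.
  const linkPattern = /<td class="title"[^>]*>\s*<a href="([^"]*)"[^>]*>([^<]*)<\/a>/;

  const posts = [];
  for (const [, rowHtml] of html.matchAll(rowPattern)) {
    // Step 3: skip rows without a td.votelinks child (the hiring posts).
    if (!rowHtml.includes('class="votelinks"')) continue;
    const link = rowHtml.match(linkPattern);
    if (link) posts.push({ title: link[2].trim(), url: link[1] });
  }
  return posts;
}
```

Running `extractPosts(sampleHtml)` on this sample keeps the two rows that have a vote link and drops the hiring row. Keeping the extraction in a function that takes an HTML string makes it easy to check against saved pages before pointing it at a live request.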

Here's the code

Run that and you'll get a hackernews.txt file that looks something like:

First you have the title of the post on Hacker News and then the URL of that post on the next line. If you wanted both the title and URL on the same line, you can change the code:

to something like:

This allows you to use a comma as a delimiter so you can open up the file in a spreadsheet like Excel or a different program. You may want to use a different delimiter, such as a semicolon, which is an easy change above.
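A minimal sketch of that change, assuming each scraped post is held as an object with `title` and `url` fields (hypothetical names, as are the helper functions and the sample post). The first helper writes the two values on separate lines and the second joins them with a delimiter. Quoting values that contain the delimiter is an extra precaution that this tutorial does not mention; it is included because post titles often contain commas.

```javascript
// Two lines per post: the title, then the URL.
function formatOnTwoLines(post) {
  return post.title + '\n' + post.url + '\n';
}

// One line per post: title and URL joined by a delimiter (comma by default).
function formatOnOneLine(post, delimiter = ',') {
  return [post.title, post.url]
    .map((value) => quoteIfNeeded(value, delimiter))
    .join(delimiter) + '\n';
}

// Wrap a value in double quotes when it contains the delimiter, a quote,
// or a newline, doubling any embedded quotes (the usual CSV convention).
function quoteIfNeeded(value, delimiter) {
  const text = String(value);
  if (text.includes(delimiter) || text.includes('"') || text.includes('\n')) {
    return '"' + text.replace(/"/g, '""') + '"';
  }
  return text;
}

// Made-up sample post; note the comma inside the title.
const examplePost = {
  title: 'Show HN: Tiny, fast scraper',
  url: 'https://example.com/scraper',
};
```

With this sample, `formatOnOneLine(examplePost)` quotes the title because it contains a comma, whereas `formatOnOneLine(examplePost, ';')` leaves it unquoted since no semicolon appears in either value.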

Web Scraping BuzzFeed

Run that and you'll get something like the following in a buzzfeed.txt file:

Want more?

I'll eventually update this post to explain how the web scraper works. Specifically, I'll talk about how I chose the selectors to pull the correct content from the right HTML element. There are great tools that make this process very easy, such as Chrome DevTools, which I use when I'm writing a web scraper for the first time.

I'll also show you how to iterate through the pages on each website to scrape even more content.

Finally, in a future post I'll detail how to insert these records into a database instead of a .txt file. Be sure to check back!

In the meantime, you may be interested in my tutorial on how to create a web crawler in Node.js / JavaScript.